This Open Source Tool Saves Me Thousands of Dollars

Jonas Scholz

I used to pay 30 dollars per month (per user!) for ChatGPT. Then I realized I could self-host the UI and save thousands 🤑

I replaced it all with a self-hosted setup using Open WebUI, and it is now saving me thousands of dollars a year across sliplane.io and side projects.

What is Open WebUI?

It is an open source, offline-first interface for local and remote LLMs. It connects to a range of backends and comes with a few extras:

- Ollama for local models like LLaMA, Mistral, or Phi-3

- OpenAI-compatible APIs like Mistral, Together, Groq, LM Studio, or LocalAI (and obviously OpenAI itself)

- Built-in RAG support with local files or vector databases

- A modern interface that feels very close to ChatGPT

You get most of ChatGPT without the recurring bill, and with (nearly) full control.
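To make "OpenAI-compatible" a bit more concrete: these backends accept the same request shape, so switching providers mostly means switching the base URL and the key. Below is a minimal sketch that only builds such a request without sending it. The base URLs, the `build_chat_request` helper, and the model name are assumptions for illustration, so check your provider's documentation before relying on them.

```python
import json
import urllib.request

# Assumed base URLs for illustration; verify them against each provider's docs.
BASE_URLS = {
    "openai": "https://api.openai.com/v1",
    "ollama": "http://localhost:11434/v1",  # Ollama's OpenAI-compatible endpoint
}


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for an OpenAI-style API."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical values: a local Ollama instance with a "mistral" model pulled.
    req = build_chat_request(BASE_URLS["ollama"], "placeholder-key", "mistral", "Hello!")
    print(req.get_method(), req.full_url)
```

Only the base URL and key change between providers here, which is roughly what Open WebUI relies on when you point it at a different backend.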

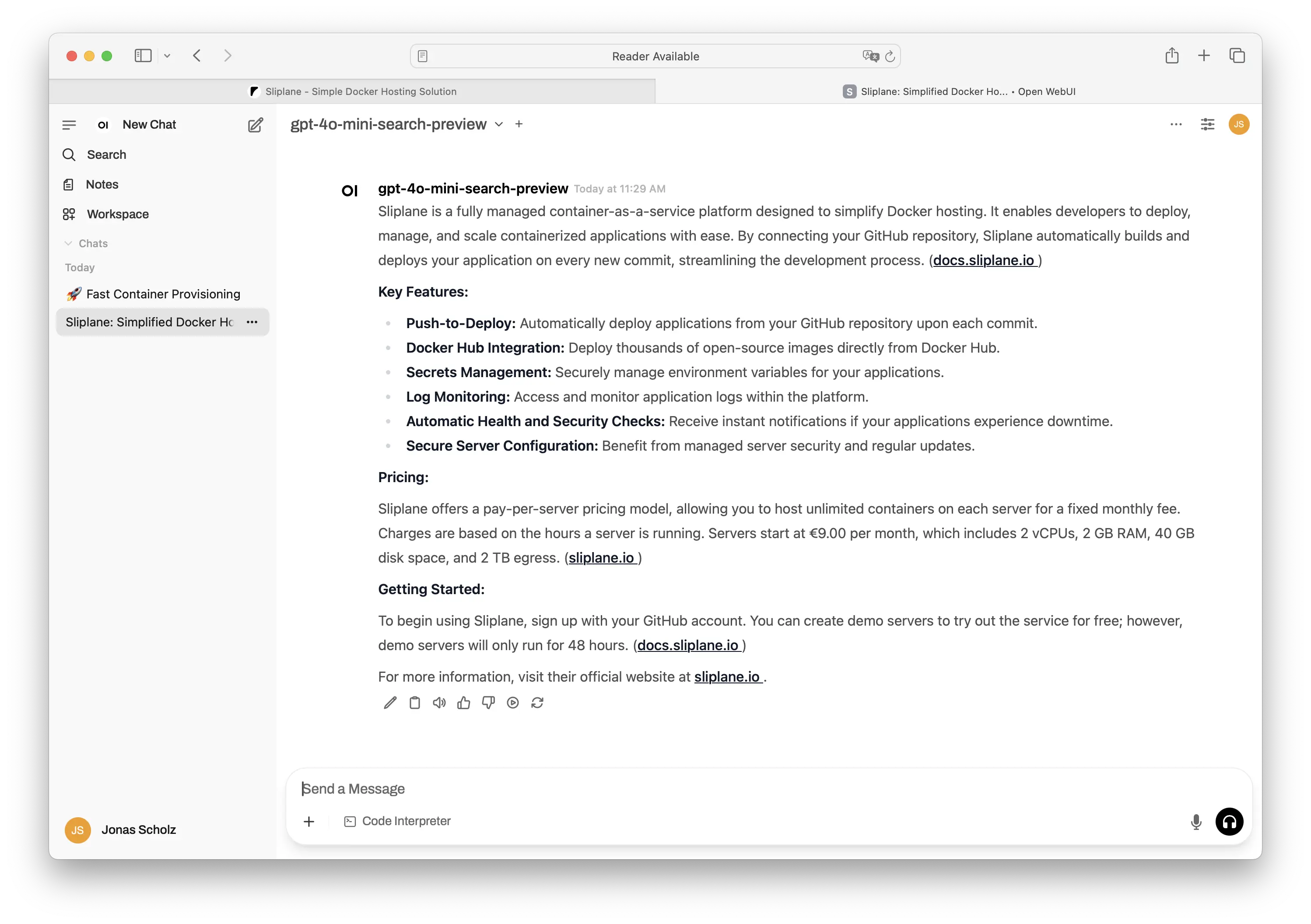

Here is what Open WebUI looks like in action:

The clean and familiar chat interface

Easy member management and settings

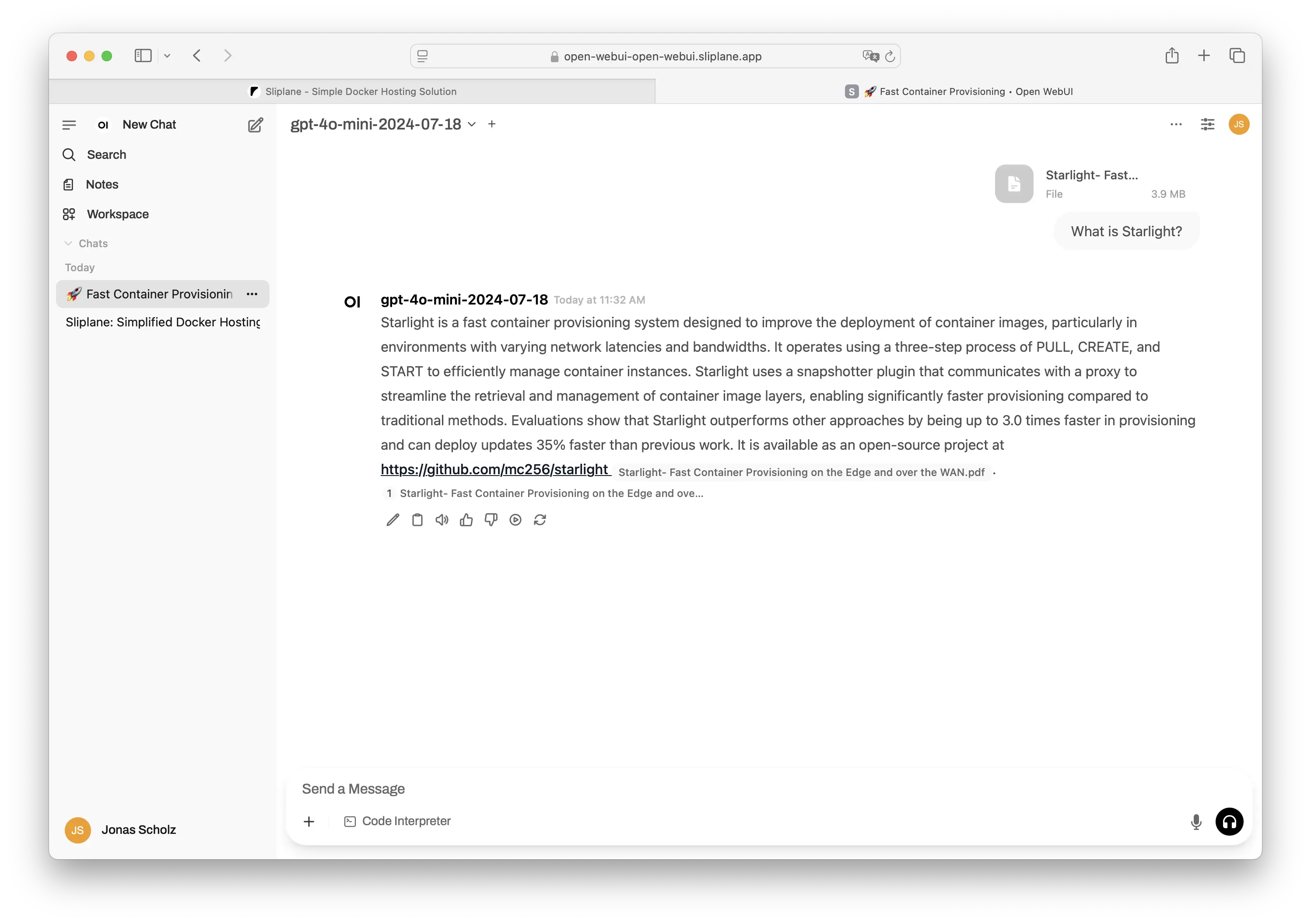

Built-in RAG (Retrieval Augmented Generation) support

Why I Switched

I used to pay for the ChatGPT Team plan. Thirty dollars per user per month. That adds up quickly if you are running a business or even a small team.

The truth is we did not need most of the Team features. We just wanted a clean interface, conversation history, and reliable model access. Open WebUI gave us exactly that, and for free.

My Setup

- Open WebUI hosted on Sliplane

- An OpenAI API key for running models remotely (you could also run models locally with Ollama instead)

It runs in my existing infra, feels almost as good, and gives me more flexibility. You can of course run it on any server you want. I am slightly biased and will always use Sliplane :)

Run It with Docker

If you are just getting started, you can run it with a single command:

    docker run -d -p 3000:8080 \
      -e OPENAI_API_KEY=your_secret_key \
      -v open-webui:/app/backend/data \
      --name open-webui \
      --restart always \
      ghcr.io/open-webui/open-webui:main

Or use Docker Compose:

    services:
      open-webui:
        image: ghcr.io/open-webui/open-webui:main
        container_name: open-webui
        ports:
          - "3000:8080"
        environment:
          - OPENAI_API_KEY=your_secret_key
        volumes:
          - open-webui:/app/backend/data
        restart: always

    volumes:
      open-webui:

Then open http://localhost:3000 in your browser.
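If you would rather check from a script than from the browser, here is a small sketch that tests whether the instance answers over HTTP. It assumes the UI is published on port 3000 as above. The `is_reachable` helper is a made-up name for this example, not part of Open WebUI.

```python
import http.client
import urllib.error
import urllib.request


def is_reachable(url: str, timeout: float = 5.0) -> bool:
    """Return True if the URL answers with an HTTP 2xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status.
        return False


if __name__ == "__main__":
    url = "http://localhost:3000"  # assumed host port from the Docker setup
    print(f"{url} reachable: {is_reachable(url)}")
```

The container can take a little while to start on first boot, so a `False` right after `docker run` does not necessarily mean something is broken.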

Tradeoffs

Open WebUI gets surprisingly close to ChatGPT in features, but there are still some tradeoffs:

- You manage the backend: You choose the model (OpenAI, Mistral, Ollama, etc.), which means you also manage latency, availability, and cost. Great flexibility, but more responsibility.

- Not everything is as seamless: While it has browsing and a code interpreter, they’re not always as tightly integrated or polished as in ChatGPT. Some tools require extra setup (like file system access or external APIs).

- Self-hosting comes with overhead: You’ll need to manage updates, API keys, and occasional debugging. It’s low maintenance once running, but still something to monitor.

- Team features are basic: It supports multiple users and conversations, but lacks more advanced enterprise features like role-based access, usage analytics, or granular billing (not super deep into the enterprise stuff but I am sure it is not as advanced as ChatGPT).

If you're okay with a little tinkering, Open WebUI gives you a huge amount of value and control in return.

Deploy It on Sliplane

Want the fastest way to get started without touching a server?

You can deploy Open WebUI on Sliplane in under 2 minutes:

- One click deploy

- HTTPS out of the box

- Secrets management built in

- Backups included

- Runs on European infrastructure

The Savings

Here is what the cost difference looked like for me:

| Tool | Monthly cost per user | Users | Yearly cost |
|---|---|---|---|
| ChatGPT Team | 30 | 3 | 1080 |
| Open WebUI | 3-5 | unlimited | 108 |
| API | pay per use | shared | ~240 |

The cost for Open WebUI depends on the hosting you use. I put it on a Base Sliplane server, which costs 9 Euros per month. Unless you have a team of 100 users all using it concurrently, that is plenty of performance.

You also pay for the API calls. That cost depends on the model you use and the number of requests you make, but I can guarantee you most users will never hit 30 dollars a month in usage :)
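The arithmetic behind the table can be sketched in a few lines. The numbers come straight from the table. The function names are made up for this example, and two simplifications are assumptions: Euros and dollars are treated as equal, and the API spend is held at the rough yearly estimate even though in practice it grows with usage.

```python
CHATGPT_TEAM_PER_USER = 30  # per user per month, from the table
HOSTING_PER_MONTH = 9       # flat price of the Base Sliplane server
API_PER_YEAR = 240          # rough shared API estimate from the table


def yearly_cost(monthly_cost: float, users: int = 1) -> float:
    """Yearly cost for a monthly price, optionally multiplied per user."""
    return monthly_cost * users * 12


def yearly_savings(users: int, api_per_year: float = API_PER_YEAR) -> float:
    """ChatGPT Team cost minus self-hosted cost (flat hosting plus API spend)."""
    chatgpt = yearly_cost(CHATGPT_TEAM_PER_USER, users)
    self_hosted = yearly_cost(HOSTING_PER_MONTH) + api_per_year
    return chatgpt - self_hosted


if __name__ == "__main__":
    print(yearly_cost(CHATGPT_TEAM_PER_USER, 3))  # 1080
    print(yearly_cost(HOSTING_PER_MONTH))         # 108
    print(yearly_savings(3))                      # 732
```

Because hosting is a flat fee while ChatGPT Team is billed per seat, the gap widens with every additional user, as long as API usage stays moderate.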

Final Thoughts

If you are:

- Spending too much on AI tools

- Comfortable running Docker

- Fine with a few rough edges

Then self-hosting Open WebUI is one of the easiest wins out there. Combine it with a good API backend like Mistral or run models locally with Ollama, and you get most of what ChatGPT offers for a fraction of the cost.

It saved me thousands. Maybe it will do the same for you.

Cheers,

Jonas, Co-Founder of Sliplane