5 Awesome Ollama Alternatives You Should Know

Jonas Scholz

Ollama has become one of the most popular solutions for running Large Language Models (LLMs) locally. With its simple CLI and straightforward setup, it's the go-to choice for many developers. But what if you're looking for something different? Maybe you need a graphical interface, more flexibility, or even cloud-based solutions?

In this article, I'll introduce you to 5 awesome alternatives to Ollama, each with its own strengths. Whether you're a beginner or a pro, there should be an option here that fits your needs.

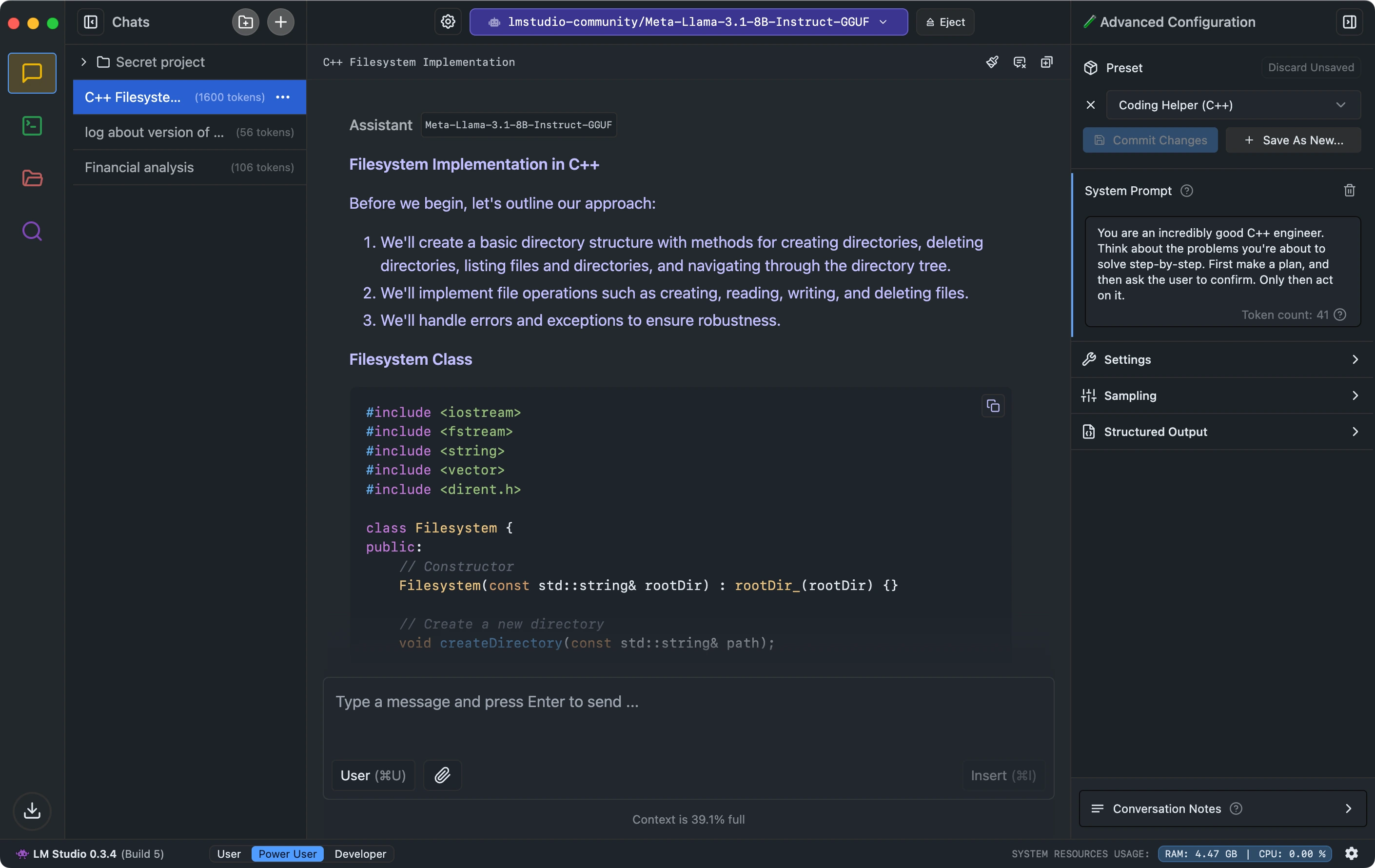

1. LM Studio - The User-Friendly Desktop App

LM Studio is perfect for anyone who prefers a graphical interface. This desktop application makes working with LLMs easier than ever before.

What Makes LM Studio Special?

- Intuitive GUI: No command line needed - everything runs through a modern desktop app

- Built-in Model Browser: Search and download models directly from Hugging Face

- RAG Support: Drag and drop PDFs or text files into the app and ask questions about them

- OpenAI-compatible API: Use LM Studio as a drop-in replacement for the OpenAI API
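To make the "drop-in replacement" point concrete, here is a minimal sketch of an OpenAI-style chat request sent to a local LM Studio server, using only the Python standard library. It assumes the local server is enabled in LM Studio and listening on port 1234 (the default as far as I know; check your settings). The model name `local-model` is a placeholder, and the helper names are my own.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on port 1234.
LM_STUDIO_BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model", base_url=LM_STUDIO_BASE_URL):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,  # placeholder; use the identifier of the model you loaded
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running and a model loaded, `ask("Explain RAG in one sentence.")` should return the model's reply as a string. The request shape is the same one the OpenAI chat completions endpoint expects, which is what makes swapping backends possible.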

Installation and Hardware

Installation couldn't be simpler: Download the installer for Windows, macOS, or Linux and install the app like any other software. For good performance, I recommend at least 16 GB of RAM and a dedicated GPU.

Who Is LM Studio Ideal For?

LM Studio is particularly suitable for:

- Beginners who want to work with LLMs without technical knowledge

- Teams that value privacy and need to process sensitive data locally

- Windows users looking for a native desktop experience

The biggest difference from Ollama? LM Studio offers a complete GUI with visual controls, while Ollama is primarily operated via command line. However, LM Studio is also more resource-hungry and, as closed-source software, less transparent.

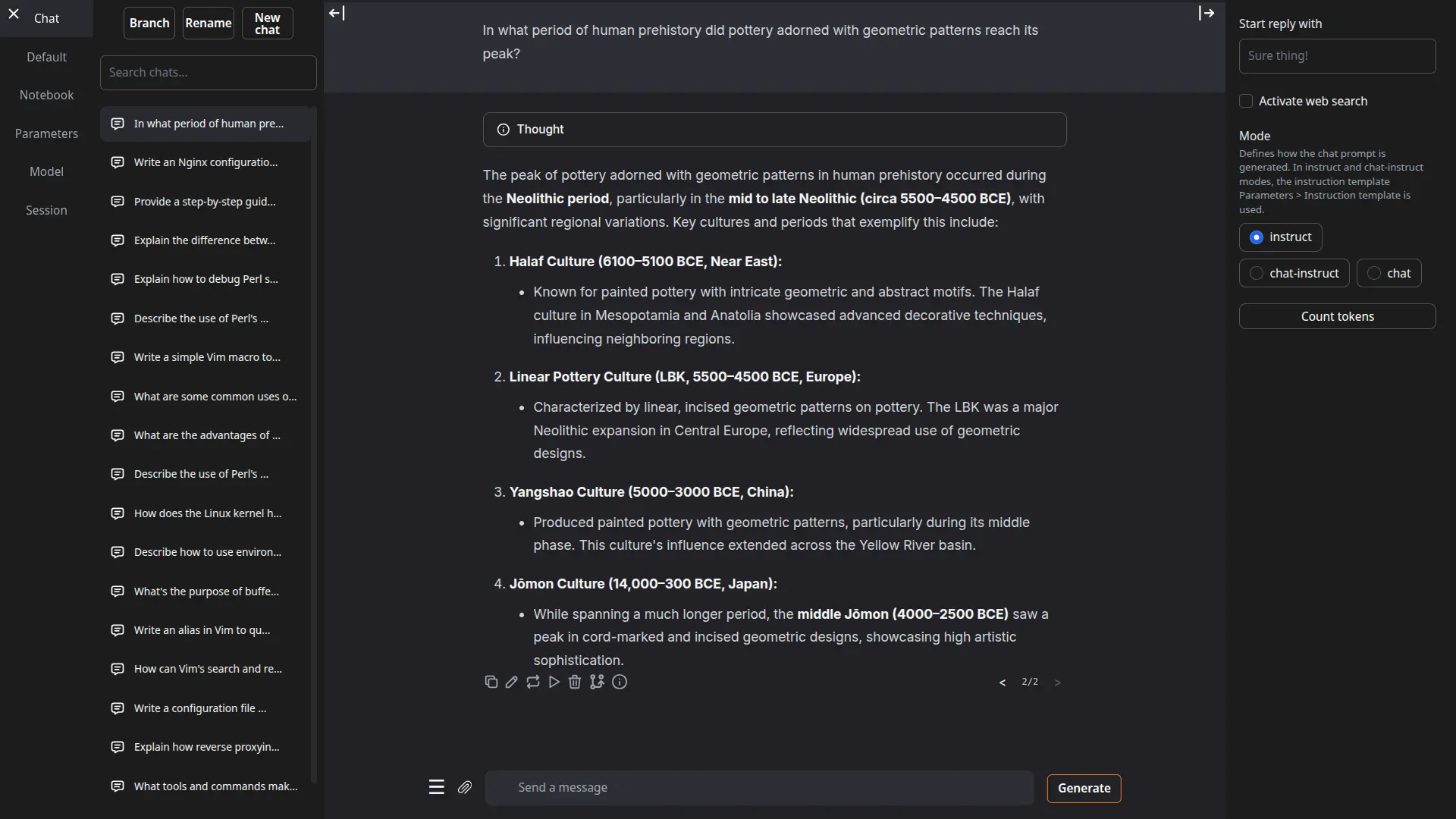

2. Text-Generation-WebUI (Oobabooga) - The Swiss Army Knife

Text-Generation-WebUI is the ultimate playground for LLM enthusiasts. This open-source web application offers incredible flexibility and extensibility.

The Killer Features

- Multi-format support: Load models in GGUF, GPTQ, AWQ, and many other formats

- Extensible through plugins: From speech-to-text to translations - everything's possible

- Character system: Create your own personas and role-playing scenarios

- Built-in RAG functionality: Load documents and ask contextual questions

Setup and Usage

You have two options:

- Easy Mode: Download the portable archive and start with one click

- Developer Mode: Install via pip/conda for maximum control

After starting, you can access the interface at http://localhost:7860.

Perfect for Power Users

Text-Generation-WebUI is ideal if you:

- Love experimenting and want to test different models

- Want full control over generation parameters

- Pursue creative use cases like storytelling or role-playing

Compared to Ollama, the WebUI offers significantly more features and customization options, but also requires a bit more technical understanding during setup.

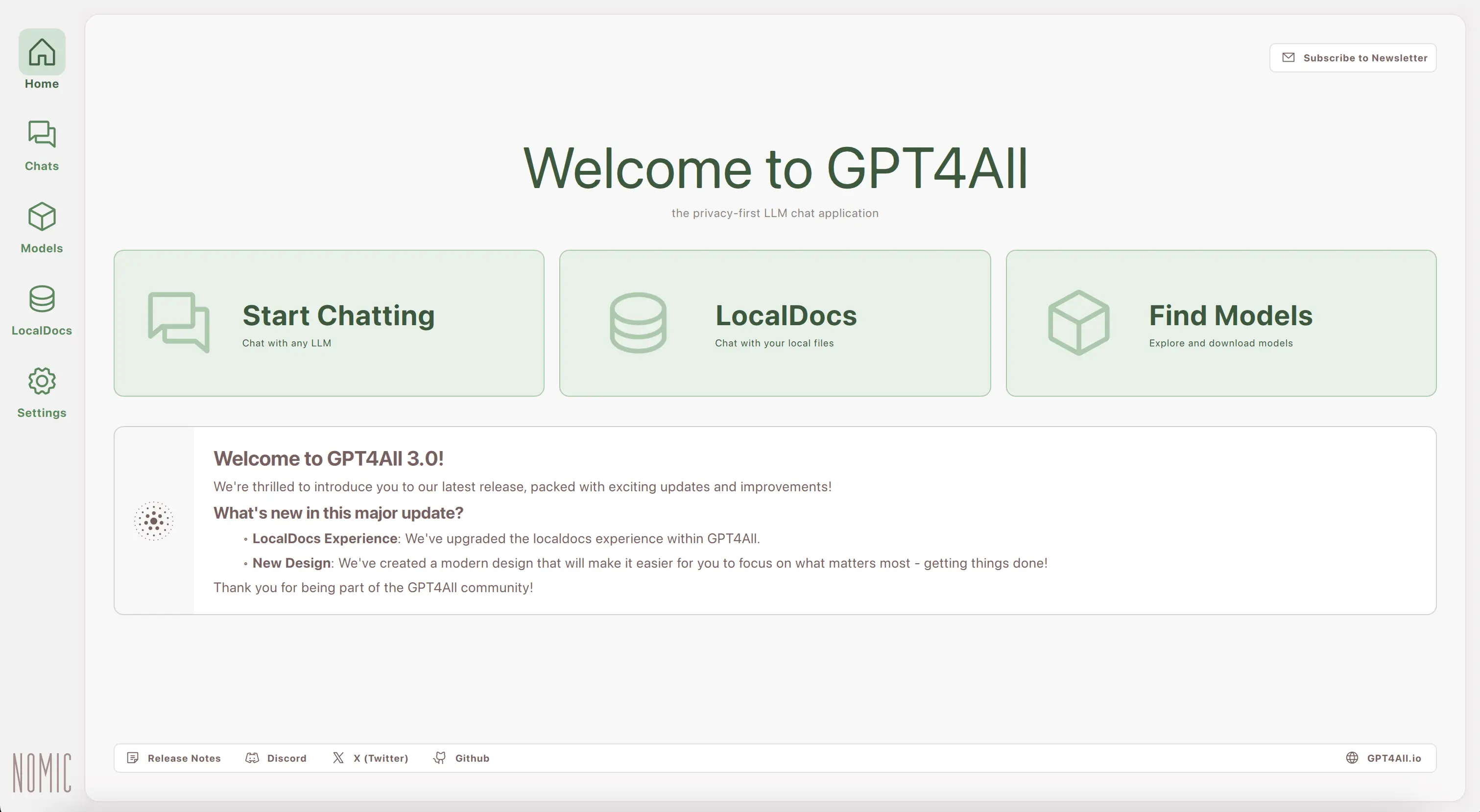

3. GPT4All - The Privacy Champion

GPT4All by Nomic AI is the perfect choice for anyone who wants to combine maximum privacy with user-friendliness.

What Sets GPT4All Apart

- 1000+ available models: One of the largest selections of pre-trained models

- LocalDocs: Analyze your local documents completely offline

- Optimized for consumer hardware: Runs surprisingly well even on older laptops

- Zero-config setup: Installation and start in under 5 minutes

Hardware Requirements

GPT4All is particularly hardware-friendly:

- Runs on CPU-only systems

- Makes optimal use of Apple Silicon

- GPU acceleration optionally available

The Target Audience

GPT4All is perfect for:

- Privacy-conscious users who never want to send their data to the cloud

- Casual users looking for a personal AI assistant

- Educational institutions that want to provide AI tools cost-effectively

The main difference from Ollama lies in the out-of-the-box experience: GPT4All comes with a ready-made chat GUI and is immediately ready to use, while Ollama focuses more on developer integration.

4. LocalAI - The OpenAI-Compatible API

LocalAI is the answer for anyone who needs a self-hosted AI API. It acts as a drop-in replacement for the OpenAI API.

The Technical Highlights

- 100% OpenAI API compatible: Just change the base URL in your apps

- Multi-modal: Supports text, images, and audio in one instance

- Scalable: Docker/Kubernetes-ready for enterprise deployments

- Modularly extensible: With LocalAGI and LocalRecall for complex AI systems
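Here is a rough sketch of what "just change the base URL" can look like in your own code. The environment variable name `LLM_BASE_URL` and both helper functions are hypothetical, chosen only for illustration; the LocalAI port 8080 matches the Docker quick start in this article.

```python
import os

OPENAI_BASE_URL = "https://api.openai.com/v1"
LOCALAI_BASE_URL = "http://localhost:8080/v1"  # port used by the Docker quick start


def resolve_base_url(env=None):
    """Return the API base URL, preferring the hypothetical LLM_BASE_URL variable."""
    env = os.environ if env is None else env
    return env.get("LLM_BASE_URL", OPENAI_BASE_URL).rstrip("/")


def endpoint(path, env=None):
    """Join the base URL and an API path such as 'chat/completions'."""
    return f"{resolve_base_url(env)}/{path.lstrip('/')}"
```

With `LLM_BASE_URL` unset, `endpoint("chat/completions")` points at OpenAI. Setting it to `http://localhost:8080/v1` sends the same requests to LocalAI, so the rest of the application does not need to know which backend it is talking to.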

Deployment Options

# Quick Start with Docker

docker run -p 8080:8080 localai/localai:latest

Or use the binaries for installation without containers.
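The container can take a moment to start, so it helps to wait until the API answers before sending real requests. The following is a small sketch under a few assumptions: the server is reachable on port 8080 as in the command above, and it exposes the OpenAI-style `/v1/models` listing. The function names and retry values are my own.

```python
import json
import time
import urllib.error
import urllib.request

MODELS_URL = "http://localhost:8080/v1/models"  # port from the docker command above


def fetch_model_ids(url=MODELS_URL):
    """Return the model ids from an OpenAI-style /v1/models response."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        body = json.load(resp)
    return [entry["id"] for entry in body.get("data", [])]


def wait_for_models(fetch=fetch_model_ids, attempts=30, delay=2.0, sleep=time.sleep):
    """Retry until the server answers, then return its model ids."""
    last_error = None
    for _ in range(attempts):
        try:
            return fetch()
        except (urllib.error.URLError, ConnectionError, TimeoutError) as exc:
            last_error = exc  # server not up yet; try again after a pause
            sleep(delay)
    raise RuntimeError(f"server not reachable after {attempts} attempts") from last_error
```

Calling `wait_for_models()` right after `docker run` returns the list of model ids once the server responds. An empty list would suggest the server is up but no models are installed yet.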

Ideal for Developers and Enterprises

LocalAI shines when you:

- Want to migrate existing OpenAI integrations to local infrastructure

- Need multi-tenancy and scaling

- Want to unite different AI modalities under one roof

In contrast to Ollama's CLI focus, LocalAI is designed from the ground up as an API server. It offers more flexibility for complex deployments but also requires more technical know-how.

5. OpenAI API - The Cloud Solution

Yes, the OpenAI API is the exact opposite of local hosting - but sometimes it's still the best choice.

The Advantages of the Cloud

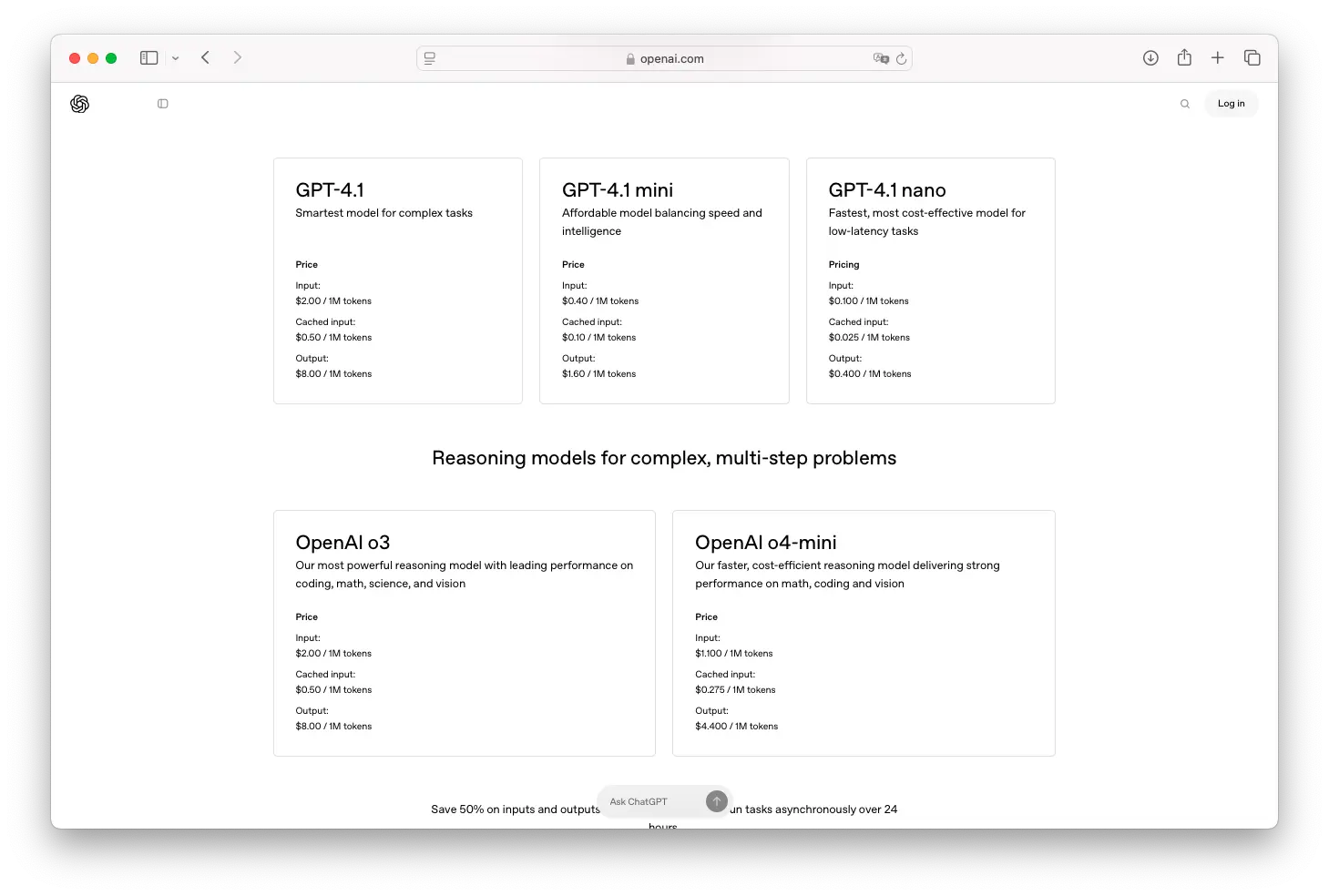

- State-of-the-art models: GPT-4 and other top models

- Zero infrastructure: No hardware investments needed

- Ready to use immediately: Generate API key and get started

- World-class performance: Optimized inference with minimal latency

Costs and Privacy

- Pay-per-use: You only pay for what you use

- Privacy considerations: Your data leaves your infrastructure

- Compliance: Check if cloud AI is compatible with your requirements
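To get a feel for what pay-per-use means in practice, here is a back-of-the-envelope cost estimate. It assumes billing per token with separate prices per million input and output tokens. The prices in the usage note below are made-up placeholders, not real OpenAI rates, so look up the current price list before relying on any number.

```python
def estimate_monthly_cost(requests_per_day, input_tokens, output_tokens,
                          input_price_per_million, output_price_per_million,
                          days=30):
    """Estimate a monthly bill from average token counts per request.

    The result is in whatever currency the two prices are given in.
    """
    per_request = (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    ) / 1_000_000
    return requests_per_day * days * per_request
```

For example, 1,000 requests per day with 500 input and 200 output tokens each, at hypothetical prices of 2.00 and 8.00 per million tokens, comes to roughly 78 per month. Comparing that figure with the cost of a GPU and electricity is a simple way to judge whether local hardware is worth it.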

When OpenAI Makes Sense

The OpenAI API is optimal for:

- Prototyping: Quickly test ideas without setup

- Sporadic use: When local hardware isn't worth it

- Highest quality: When only the best model is good enough

The fundamental difference from Ollama and all other local solutions: You trade control for convenience. No installation, no maintenance - but also no data sovereignty.

Which Alternative Is Right for You?

Here's a quick decision guide:

- Need a GUI? → LM Studio or GPT4All

- Want maximum flexibility? → Text-Generation-WebUI

- Developing an app? → LocalAI or OpenAI API

- Is privacy critical? → All local solutions (1-4)

- Need best performance? → OpenAI API

Conclusion

Ollama is and remains a fantastic solution for running LLMs locally. But as you can see, there are suitable alternatives for every use case. From user-friendly desktop apps to flexible web interfaces to scalable API servers - the selection has never been greater.

My tip: Try out different tools! Most are free and quick to install. This way you'll find the solution that perfectly fits your requirements.

Which Ollama alternative do you use? Let me know in the comments!

If you're building AI applications that need a chat interface, check out our guide on Open WebUI alternatives. For deployment options, our cheap ways to deploy Docker containers guide can help you choose the right hosting platform.